In this blog, we dive into how MyScale achieves zero downtime for its replicated clusters during Kubernetes (K8s) upgrades. Hosted on AWS and built on Amazon EKS, MyScale offers a resilient and scalable Database-as-a-Service (DBaaS). We'll explore the global and regional aspects of MyScale's architecture, tackle the challenges of autoscaling and Kubernetes upgrades, and outline our strategies for migrating user clusters seamlessly, all while ensuring uninterrupted service.

# Exploring MyScale's Architecture

MyScale is a Database-as-a-Service (DBaaS) built on the AWS cloud platform. All its services are deployed on Amazon EKS, AWS's managed Kubernetes service, which lets MyScale take full advantage of Kubernetes features such as service discovery, load balancing, automatic scaling, and security isolation.

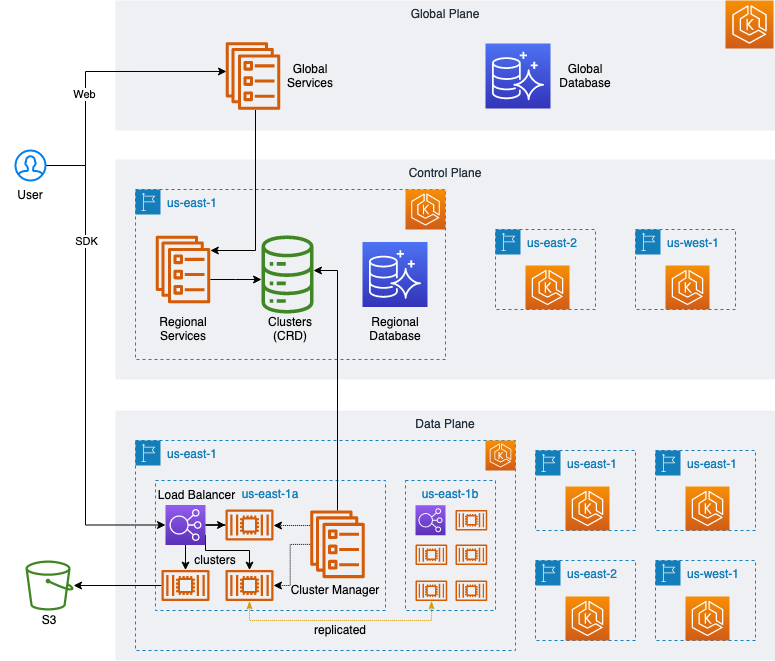

As shown in the diagram above, we have designed a highly elastic and scalable architecture. A global plane provides web services, user management, billing and payments, and so on, while multiple regional services run in different geographical locations. Each regional service provides the same functions: creating, managing, upgrading, and destroying user clusters, as well as usage monitoring and application interfaces.

Moreover, each regional service comprises a single control plane and multiple data planes.

Each region's control plane acts as its brain, responsible for managing and scheduling tasks (requests). It also links the data planes to the global plane: it accepts management requests issued by the global plane and passes them to the appropriate data plane for execution. Furthermore, the control plane collects each data plane's status and detailed usage metrics, ensuring that user clusters run correctly on the data plane.
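The control plane's role as a dispatcher can be sketched in a few lines. This is a hypothetical, in-memory model, not MyScale's controller: the `ControlPlane` and `DataPlane` classes, the action names, and the "least-loaded data plane" placement policy are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class DataPlane:
    """Hypothetical stand-in for one data-plane Kubernetes cluster."""
    name: str
    user_clusters: set = field(default_factory=set)

    def execute(self, action: str, cluster: str) -> str:
        # A real data plane would reconcile Kubernetes resources here.
        if action == "create":
            self.user_clusters.add(cluster)
        elif action == "destroy":
            self.user_clusters.discard(cluster)
        return f"{self.name}: {action} {cluster}"


@dataclass
class ControlPlane:
    """Hypothetical regional control plane that routes global-plane requests."""
    data_planes: dict
    placement: dict = field(default_factory=dict)  # user cluster -> data plane name

    def handle(self, action: str, cluster: str) -> str:
        if action == "create":
            # Assumed policy: place new clusters on the least-loaded data plane.
            target = min(self.data_planes.values(), key=lambda dp: len(dp.user_clusters))
            self.placement[cluster] = target.name
        result = self.data_planes[self.placement[cluster]].execute(action, cluster)
        if action == "destroy":
            del self.placement[cluster]
        return result

    def status(self) -> dict:
        # Collect a simple per-data-plane usage metric.
        return {name: len(dp.user_clusters) for name, dp in self.data_planes.items()}
```

With two empty data planes, two `create` requests land on different data planes, and later `upgrade` or `destroy` requests for a cluster are routed to whichever data plane hosts it.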

Each plane corresponds to an independent K8s cluster. Under normal circumstances, creating and maintaining these clusters involves a large number of cloud resources, especially when creating and configuring the Kubernetes clusters themselves. We use Crossplane to manage infrastructure, applications, and user clusters efficiently and uniformly, following Kubernetes patterns.

Because user clusters come in different specifications, we created separate NodeGroups for individual user cluster specs and workloads to maximize resource utilization on the data plane, and we use Cluster Autoscaler to scale the Kubernetes clusters automatically. A typical NodeGroup definition looks like this:

```yaml
apiVersion: eks.aws.crossplane.io/v1alpha1
kind: NodeGroup
metadata:
  name: <eks-nodegroup-name>
spec:
  forProvider:
    region: us-east-1
    clusterNameRef:
      name: <eks-cluster-name>
    subnets:
      - subnet-for-us-east-1a
      - subnet-for-us-east-1b
      - subnet-for-us-east-1c
    labels:
      myscale.com/workload: db
      myscale.com/instance-type: <myscale-instance-type>
    taints:
      - key: myscale.com/workload
        value: db
        effect: NO_EXECUTE
    instanceTypes:
      - <instance-type>
      - <instance-type>
    scalingConfig:
      maxSize: 100
      minSize: 3
```
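The label and the `NO_EXECUTE` taint work together: database pods select the label to land on these nodes, and any pod without a matching toleration is kept off (or evicted from) them. The sketch below is a simplified model of Kubernetes taint and toleration matching; it ignores `tolerationSeconds`, node affinity, and other scheduler rules, and the pod-side selector and toleration are assumptions, since the post does not show MyScale's pod specs.

```python
from dataclasses import dataclass

# EKS NodeGroup API effect names -> Kubernetes taint effect names.
EKS_TO_K8S_EFFECT = {
    "NO_SCHEDULE": "NoSchedule",
    "NO_EXECUTE": "NoExecute",
    "PREFER_NO_SCHEDULE": "PreferNoSchedule",
}


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # Kubernetes form, e.g. "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # empty key with operator "Exists" tolerates every taint
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key in ("", taint.key)
    return tol.key == taint.key and tol.value == taint.value


def can_run_on(node_labels: dict, node_taints: list, node_selector: dict, tolerations: list) -> bool:
    """Simplified check: the nodeSelector matches and every hard taint is tolerated."""
    if any(node_labels.get(k) != v for k, v in node_selector.items()):
        return False
    hard_taints = [t for t in node_taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard_taints)


# A node from the NodeGroup above, as Kubernetes would see it.
db_node_labels = {"myscale.com/workload": "db"}
db_node_taints = [Taint("myscale.com/workload", "db", EKS_TO_K8S_EFFECT["NO_EXECUTE"])]
db_toleration = Toleration("myscale.com/workload", "Equal", "db", "NoExecute")
```

A pod that selects `myscale.com/workload: db` and carries `db_toleration` can run on such a node; the same pod without the toleration cannot, which is what keeps unrelated workloads off the database nodes.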

# Addressing Autoscaling Challenges in Kubernetes

Using NodeGroups initially worked very well, but as the user cluster specifications expanded, we encountered two problems:

- A NodeGroup spanning multiple availability zones did not distribute nodes evenly across them as expected. Instead, as user clusters were created, deleted, started, and stopped, they gradually concentrated in one availability zone.

- Each NodeGroup's minimum size (`minSize`) always had to be non-zero. If it was set to zero, scale-up would not trigger in some cases, for example when a pod uses an existing PVC, and the pod would remain pending indefinitely.

To get to the root of these issues, we studied the Cluster Autoscaler logs and the following documents.

Logs:

```
Found multiple availability zones for ASG "eks-nodegroup-xxxxxxx-90c4246a-215b-da2a-0ce8-c871017528ab"; using us-east-1a for failure-domain.beta.kubernetes.io/zone label
```

In other words, when Cluster Autoscaler models a node for a multi-AZ Auto Scaling group (ASG), it assigns that node a single zone (here `us-east-1a`).

Documents:

- https://aws.github.io/aws-eks-best-practices/cluster-autoscaling/#scaling-from-0

- https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/cloudprovider/aws/README.md#auto-discovery-setup

Following the best practices in these documents, we replaced each multi-AZ NodeGroup with multiple single-AZ NodeGroups, one per availability zone. We also enabled scaling from zero by configuring ASG tags that tell Cluster Autoscaler which labels and taints a new node would have, even when the group currently has no nodes.
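The split can be sketched as a small manifest transformation. This is a hypothetical helper, not MyScale's tooling: it takes a multi-AZ NodeGroup manifest (a Python dict shaped like the YAML above) and emits one single-AZ copy per zone, with `minSize: 0` and Cluster Autoscaler `node-template` tags. The `-<zone>` naming scheme is an assumption. Taint tag values are written with the Kubernetes effect name (`NoExecute`), which is the format we understand Cluster Autoscaler's AWS provider to parse.

```python
import copy

TEMPLATE_PREFIX = "k8s.io/cluster-autoscaler/node-template"

# EKS NodeGroup API effect names -> Kubernetes taint effect names.
EKS_TO_K8S_EFFECT = {
    "NO_SCHEDULE": "NoSchedule",
    "NO_EXECUTE": "NoExecute",
    "PREFER_NO_SCHEDULE": "PreferNoSchedule",
}


def split_by_zone(base: dict, subnets_by_zone: dict, region: str) -> list:
    """Turn one multi-AZ NodeGroup manifest into one manifest per availability zone."""
    groups = []
    for zone, subnet in subnets_by_zone.items():
        group = copy.deepcopy(base)  # never mutate the original manifest
        group["metadata"]["name"] = f"{base['metadata']['name']}-{zone}"
        spec = group["spec"]["forProvider"]
        spec["subnets"] = [subnet]
        spec["scalingConfig"]["minSize"] = 0  # safe once the template tags exist
        tags = spec.setdefault("tags", {})
        tags[f"{TEMPLATE_PREFIX}/label/topology.kubernetes.io/region"] = region
        tags[f"{TEMPLATE_PREFIX}/label/topology.kubernetes.io/zone"] = zone
        tags[f"{TEMPLATE_PREFIX}/label/topology.ebs.csi.aws.com/zone"] = zone
        # Mirror the NodeGroup's own labels and taints so Cluster Autoscaler can
        # build a node template even while the group has zero nodes.
        for key, value in spec.get("labels", {}).items():
            tags[f"{TEMPLATE_PREFIX}/label/{key}"] = value
        for taint in spec.get("taints", []):
            effect = EKS_TO_K8S_EFFECT[taint["effect"]]
            tags[f"{TEMPLATE_PREFIX}/taint/{taint['key']}"] = f"{taint['value']}:{effect}"
        groups.append(group)
    return groups
```

Generating the per-zone manifests from one source keeps labels, taints, and tags from drifting apart across zones.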

The resulting configuration for one availability zone (`us-east-1a`) looks like this:

```yaml
apiVersion: eks.aws.crossplane.io/v1alpha1
kind: NodeGroup
metadata:
  name: <eks-nodegroup-name-for-az1>
spec:
  forProvider:
    region: us-east-1
    clusterNameRef:
      name: <eks-cluster-name>
    subnets:
      - subnet-for-us-east-1a
    labels:
      myscale.com/workload: db
      myscale.com/instance-type: <myscale-instance-type>
    tags:
      k8s.io/cluster-autoscaler/node-template/label/topology.kubernetes.io/region: us-east-1
      k8s.io/cluster-autoscaler/node-template/label/topology.kubernetes.io/zone: us-east-1a
      k8s.io/cluster-autoscaler/node-template/label/topology.ebs.csi.aws.com/zone: us-east-1a
      # Node-template taint tags use the Kubernetes effect name (NoExecute),
      # not the EKS API name (NO_EXECUTE) used in `taints` below.
      k8s.io/cluster-autoscaler/node-template/taint/myscale.com/workload: db:NoExecute
      k8s.io/cluster-autoscaler/node-template/label/myscale.com/workload: db
      k8s.io/cluster-autoscaler/node-template/label/myscale.com/instance-type: <myscale-instance-type>
    taints:
      - key: myscale.com/workload
        value: db
        effect: NO_EXECUTE
    instanceTypes:
      - <instance-type>
      - <instance-type>
    scalingConfig:
      maxSize: 100
      minSize: 0
```
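When a NodeGroup has zero nodes, Cluster Autoscaler has no live node to inspect, so it builds a template node from tags like these and checks whether a pending pod would fit on it. The sketch below is a simplified model of that idea, not Cluster Autoscaler's code: it treats the zone that an existing EBS-backed PVC pins a pod to as a required `topology.ebs.csi.aws.com/zone` label, and tolerations as exact `(key, value, effect)` tuples. It only recognizes taint tag values ending in a Kubernetes effect name (`NoSchedule`, `NoExecute`, `PreferNoSchedule`), mirroring our understanding of the tag format described in the Cluster Autoscaler AWS README.

```python
import re

PREFIX = "k8s.io/cluster-autoscaler/node-template/"
TAINT_VALUE = re.compile(r"^(.*):(NoSchedule|NoExecute|PreferNoSchedule)$")


def node_template(asg_tags: dict) -> tuple:
    """Build the labels and taints of a not-yet-existing node from ASG tags."""
    labels, taints = {}, []
    for key, value in asg_tags.items():
        if key.startswith(PREFIX + "label/"):
            labels[key[len(PREFIX + "label/"):]] = value
        elif key.startswith(PREFIX + "taint/"):
            match = TAINT_VALUE.match(value)
            if match:  # values in any other format are skipped
                taints.append((key[len(PREFIX + "taint/"):], match.group(1), match.group(2)))
    return labels, taints


def template_fits(labels: dict, taints: list, required_labels: dict, tolerated: set) -> bool:
    """Would a pending pod fit on a new node from this group? (simplified)"""
    if any(labels.get(k) != v for k, v in required_labels.items()):
        return False
    # PreferNoSchedule is only a soft preference, so it never blocks scale-up here.
    return all(t in tolerated for t in taints if t[2] != "PreferNoSchedule")
```

For a group tagged for `us-east-1a`, a pod whose PVC lives in `us-east-1a` fits the template, while one whose PVC lives in `us-east-1b` does not, so its scale-up has to come from the `us-east-1b` NodeGroup. This is why each zone needs its own NodeGroup with its own zone tags.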

# Navigating Kubernetes Upgrades

We were soon tasked with upgrading our K8s clusters because of AWS's deprecation notice for EKS 1.24. Consequently, the challenge was to upgrade to the latest version before the support deadline.

According to the Amazon EKS release calendar, each Kubernetes version receives standard support on EKS for 14 months after its release on EKS.
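As a quick illustration of the 14-month window, the helper below adds calendar months to a date. The release date in the example is hypothetical, and the exact end-of-support dates published in the EKS release calendar remain the authoritative source for real planning.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping the day to the length of the target month."""
    month_index = start.month - 1 + months
    year, month = start.year + month_index // 12, month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def standard_support_end(eks_release: date, support_months: int = 14) -> date:
    """Approximate end of standard support for a version released on EKS on `eks_release`."""
    return add_months(eks_release, support_months)


# Hypothetical example: a version that became available on EKS on 10 May 2023.
print(standard_support_end(date(2023, 5, 10)))  # 2024-07-10
```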

We tested AWS's upgrade process based on the documentation they provided:

- https://docs.aws.amazon.com/eks/latest/userguide/update-cluster.html

- https://docs.aws.amazon.com/eks/latest/userguide/update-managed-node-group.html

- https://docs.aws.amazon.com/eks/latest/userguide/managed-node-update-behavior.html

When testing, we came across the following issues:

- Multiple versions cannot be upgraded at once: only one minor version can be upgraded at a time, and with Kubernetes releasing about three minor versions a year, keeping up means as many as three upgrades a year.

- NodeGroup upgrades are slow: upgrading a single NodeGroup takes tens of minutes, during which its pods are migrated, forcibly if necessary.

Frequent version upgrades, combined with long upgrade times and forced pod migration, could abruptly interrupt existing network connections, significantly affecting the user clusters on the data plane and forcing users to watch the system status page and deal with possible issues.

To find solutions to these challenges, we studied the relevant AWS EKS docs and found the following helpful information:

- The EKS control plane and NodeGroup can differ by one version. Beginning from EKS 1.28, a difference of two versions is allowed.

- After the EKS control plane is upgraded, a NodeGroup with the control plane's version can be created to coexist with the old NodeGroup that has not been upgraded.

Based on these points, we made the following changes:

- We added a `myscale.com/instance-version` label to the existing NodeGroup's configuration:

```yaml
labels:
  myscale.com/instance-version: v1.24
```

- Then, we upgraded the EKS control plane:

```yaml
apiVersion: eks.aws.crossplane.io/v1beta1
kind: Cluster
metadata:
  name: <eks-cluster-name>
spec:
  forProvider:
    version: "1.25"
```

After the control plane upgrade completes, we do not upgrade the existing NodeGroup. Instead, we create a new NodeGroup on the upgraded control plane version and add a label marking that version:

```yaml
labels:
  myscale.com/instance-version: v1.25
```

We then added the following configuration to the cluster controller so that it can schedule user clusters during the transition between versions:

```yaml
node_group:
  default: v1.25
  allowed:
    - "v1.25"
    - "v1.24"
```

Now, newly created user clusters, as well as existing clusters whose users trigger a version upgrade, restart, or similar operation, are scheduled to the NodeGroup labeled v1.25 by default, while all other clusters remain unchanged. This removes forced pod migration from the EKS upgrade and makes the upgrade transparent to users.
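The scheduling rule can be sketched as a small decision function. It is a hypothetical model of the cluster controller, not its actual code: the event names are illustrative, and sending a cluster whose current version is not in `allowed` to the default NodeGroup is our assumption about how such a list would be used.

```python
from typing import Optional

# In-memory form of the node_group configuration shown above.
NODE_GROUP_CONFIG = {"default": "v1.25", "allowed": ["v1.25", "v1.24"]}

# Hypothetical operations that recreate a user cluster's pods anyway.
RESCHEDULING_EVENTS = {"create", "upgrade", "restart"}


def pick_instance_version(event: str, current: Optional[str], config: Optional[dict] = None) -> str:
    """Return the myscale.com/instance-version label a user cluster's pods should target."""
    config = config or NODE_GROUP_CONFIG
    if event in RESCHEDULING_EVENTS or current not in config["allowed"]:
        return config["default"]  # new or recreated pods go to the new NodeGroup
    return current  # running clusters stay where they are


def node_selector(version: str) -> dict:
    """nodeSelector that pins pods to NodeGroups labeled with the given version."""
    return {"myscale.com/instance-version": version}
```

Only events that recreate pods anyway move a cluster to the new NodeGroup, so clusters that are simply running are never disturbed by the EKS upgrade.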

Note that EKS 1.28 allows a skew of two versions. From EKS 1.26 onward, we can therefore upgrade the EKS control plane through two consecutive versions (for example, 1.26 to 1.27 and then to 1.28) while user clusters stay on the 1.26 NodeGroup, and then migrate them once, halving the number of NodeGroup migrations.
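The effect of the larger skew on the number of migrations can be checked with a small planning sketch. It assumes, as described above, that the control plane advances one minor version at a time and that NodeGroups may lag it by at most `max_skew` minor versions. For simplicity, `max_skew` is held constant over the whole run, and versions are given as the minor number of `1.x`.

```python
def plan(current: int, target: int, max_skew: int) -> list:
    """Upgrade steps from 1.<current> to 1.<target>; requires max_skew >= 1."""
    steps, control_plane, node_groups = [], current, current
    while control_plane < target:
        control_plane += 1  # the control plane moves one minor version at a time
        steps.append(f"upgrade control plane to 1.{control_plane}")
        # Migrate user clusters only at the end, or when the next control-plane
        # upgrade would leave the NodeGroups more than max_skew versions behind.
        if control_plane == target or control_plane + 1 - node_groups > max_skew:
            node_groups = control_plane
            steps.append(f"migrate user clusters to a 1.{node_groups} NodeGroup")
    return steps


def migrations(steps: list) -> int:
    return sum(step.startswith("migrate") for step in steps)


print(migrations(plan(26, 30, max_skew=1)))  # 4
print(migrations(plan(26, 30, max_skew=2)))  # 2
```

The control plane still goes through every minor version; what the extra skew saves is the user cluster migrations, which are the expensive part.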

# Strategizing User Cluster Upgrades

However, some user clusters are still running on the old v1.24 NodeGroup.

When will these clusters be migrated?

Before discussing this issue, let's first categorize user clusters as follows:

- Multi-replica user clusters

- Single-replica user clusters

The replicas of a multi-replica cluster run on multiple nodes in different availability zones. Before a pod is migrated, the load balancer routes new user requests to the other replicas, so the pod being migrated no longer receives new requests. Once its in-flight requests have been processed, the pod can be migrated. Repeating this process for each pod in turn migrates the entire multi-replica user cluster.
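The rolling procedure can be sketched as follows. This is a hypothetical in-memory model: `Replica`, the `drain` callback (standing in for waiting until in-flight requests finish), and moving a replica by rewriting its `node_group` field (standing in for recreating the pod on the new NodeGroup) are illustrative assumptions. The guard makes the key precondition explicit: at least one other replica must keep serving while a replica is out of the load balancer.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    node_group: str
    in_load_balancer: bool = True
    in_flight: int = 0  # requests still being processed


def migrate_cluster(replicas: list, target: str, drain) -> list:
    """Move replicas to `target` one at a time while the others keep serving."""
    moved = []
    for replica in replicas:
        if replica.node_group == target:
            continue
        replica.in_load_balancer = False  # load balancer stops sending new requests here
        if not any(r.in_load_balancer for r in replicas):
            replica.in_load_balancer = True  # undo: removing it would cause downtime
            raise RuntimeError(f"{replica.name} is the only serving replica")
        drain(replica)               # wait for in-flight requests to complete
        replica.node_group = target  # e.g. recreate the pod on the new NodeGroup
        replica.in_load_balancer = True
        moved.append(f"{replica.name} -> {target}")
    return moved
```

The guard refuses a single-replica cluster, which is exactly the case discussed next.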

However, a single-replica user cluster contains only one pod, so the multi-replica logic cannot be applied directly.

How, then, do we handle this migration? Can we reuse the approach for multi-replica user clusters?

When a MyScale cluster is scaled out by adding replicas, the new replicas automatically synchronize data from the existing ones, and once synchronization is complete, all replicas serve requests simultaneously.

Therefore, all we need to do is change the configuration of the user cluster to be migrated. Its original single-replica configuration looks like this:

```yaml
apiVersion: db.myscale.com/v1alpha1
kind: Cluster
metadata:
  name: <cluster-name>
spec:
  replicas: 1
  shards: 1
  # ......
```

Before the migration, we expand it from a single replica to two replicas:

```yaml
apiVersion: db.myscale.com/v1alpha1
kind: Cluster
metadata:
  name: <cluster-name>
spec:
  replicas: 2
  shards: 1
  # ......
```

Then, we reuse the multi-replica migration logic to perform the migration. Once the migration has finished, we scale back down and delete the extra pods.
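Put together, the single-replica procedure is a thin wrapper around the multi-replica one. In the sketch below, `scale`, `wait_synced`, and `migrate_replicas` are hypothetical hooks standing in for patching `spec.replicas`, waiting for the new replica to finish synchronizing data, and running the rolling migration; the manifest is a dict shaped like the `Cluster` YAML above.

```python
def migrate_single_replica(cluster: dict, scale, wait_synced, migrate_replicas) -> None:
    """Temporarily add a replica, migrate, then return to the original size."""
    original = cluster["spec"]["replicas"]
    scale(cluster, original + 1)  # e.g. spec.replicas: 1 -> 2
    wait_synced(cluster)          # the new replica copies data from the existing one
    migrate_replicas(cluster)     # reuse the multi-replica rolling migration
    scale(cluster, original)      # remove the extra replica again
```

Waiting for synchronization before migrating matters: until the new replica holds a full copy of the data, the original pod is still the only one able to serve requests.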

Once all user clusters have been migrated, no pods remain on the nodes of the old NodeGroup, and Cluster Autoscaler automatically deletes those empty nodes. All we need to do is find and delete the NodeGroups that have scaled down to zero nodes. The entire process has no impact on users, and EKS ends up fully upgraded to the new version.
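The final cleanup check amounts to a simple filter. In this sketch the NodeGroup records are hypothetical dicts; in practice the node count might come from counting Kubernetes nodes that carry the NodeGroup's labels or from the Auto Scaling group's capacity, which is an assumption rather than a description of MyScale's tooling.

```python
def node_groups_to_delete(node_groups: list, current_version: str) -> list:
    """Names of old-version NodeGroups that Cluster Autoscaler has already emptied."""
    return [
        group["name"]
        for group in node_groups
        if group["labels"].get("myscale.com/instance-version") != current_version
        and group["node_count"] == 0
    ]
```

Empty NodeGroups on the current version are deliberately kept: with `minSize: 0`, they are expected to sit at zero nodes until Cluster Autoscaler scales them up.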

# In Conclusion

Our journey through these architectural challenges and upgrades has increased MyScale's robustness and efficiency and ensured zero downtime during critical Kubernetes upgrades. Lastly, this journey reflects our commitment to providing our users with a seamless, high-performance DBaaS experience.